SAP Datasphere - Data Integration - Part 2 - Data Integration based on Data Flows and External Sources

Data Integration based on Data Flows:

According to SAP's definition, a Data Flow is used “to move and transform data in an intuitive graphical interface”. Data Flows are created in the Data Builder in SAP Datasphere, and they currently support the following operators in the transformation process:

- Join Operator

- Union Operator

- Projection Operator

- Aggregation Operator

- Script Operator

Coming from a strong Business Warehouse background, we always compare things to the past, which in our case means a classical SAP BW on HANA or SAP BW/4HANA system. We currently see the Data Flow as a substitute for what used to be the transformation in a BW system.

In a Data Flow, we can apply a series of standard transformations graphically, without any programming knowledge, but we can also create transformations based on scripts. A Data Flow can use views or tables that already exist in our SAP Datasphere, or use connections to get data from other systems. In the latter case, we first need to create all the necessary connections in our space.

Steps to be followed to create a Data Flow:

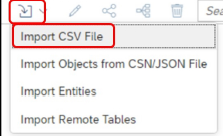

- Create Connection

- Build Data Flow

- Execute / Schedule Data Flow

- Data Modeling / Story Creation

With the standard transformations, we combine data sets using no-code operators such as Projection, Aggregation, Join, Filter and Union. The data sources can be tables, ABAP CDS views, OData services, or remote files (JSON, Excel, CSV, ORC).
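
To make the operator semantics concrete, here is a rough analogy in plain pandas. This is not Datasphere code: in a Data Flow these steps are configured graphically. The table and column names (orders, customers, CUSTOMER_ID, AMOUNT and so on) are made up for illustration.

```python
import pandas as pd

# Hypothetical source tables, invented for this illustration
orders = pd.DataFrame({
    "ORDER_ID": [1, 2, 3],
    "CUSTOMER_ID": [10, 20, 10],
    "AMOUNT": [100.0, 50.0, 25.0],
    "STATUS": ["OPEN", "CLOSED", "CLOSED"],
})
orders_archive = pd.DataFrame({
    "ORDER_ID": [4],
    "CUSTOMER_ID": [20],
    "AMOUNT": [75.0],
    "STATUS": ["CLOSED"],
})
customers = pd.DataFrame({
    "CUSTOMER_ID": [10, 20],
    "COUNTRY": ["DE", "US"],
})

# Union: stack two data sets that share the same structure
all_orders = pd.concat([orders, orders_archive], ignore_index=True)

# Filter: keep only the rows that match a condition
closed = all_orders[all_orders["STATUS"] == "CLOSED"]

# Join: combine two data sets on a common key
joined = closed.merge(customers, on="CUSTOMER_ID", how="inner")

# Projection: keep only the columns needed downstream
projected = joined[["COUNTRY", "AMOUNT"]]

# Aggregation: group by a dimension and sum a measure
totals = projected.groupby("COUNTRY", as_index=False)["AMOUNT"].sum()
print(totals)
```

Each intermediate variable corresponds roughly to the output of one operator node feeding into the next one in the flow.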

With scripting, we can cover advanced transformation requirements, such as extracting text, directly in the Data Flow. The Script operator supports Python 3. It receives data from the previous operator, transforms it with a Python script, and outputs structured data to the next operator. You provide the transformation logic as the body of the transform function in the operator's script property: incoming data is passed in through the function's data parameter, and the function's return value becomes the operator's output.
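
A minimal sketch of such a transform function could look like the following. It assumes the incoming data arrives as a pandas DataFrame, and the column names EMAIL and EMAIL_DOMAIN are hypothetical. The import and the sample data at the bottom are only there so the snippet can be tried locally; in the operator itself, only the function would go into the script property.

```python
import pandas as pd

def transform(data):
    # Assumption: `data` is a pandas DataFrame handed over by the previous operator.
    # Work on a copy so the incoming frame is left untouched.
    result = data.copy()
    # Text extraction example: pull the domain out of a hypothetical EMAIL column.
    result["EMAIL_DOMAIN"] = result["EMAIL"].str.extract(r"@([\w.-]+)$", expand=False)
    # The returned DataFrame is what the next operator would receive.
    return result

# Local check with made-up sample data (not part of the operator script)
sample = pd.DataFrame({"EMAIL": ["anna@example.com", "bob@sap.example.org", None]})
output = transform(sample)
print(output)
```

Rows without a usable e-mail address end up with a missing value in the new column rather than raising an error, which is usually the safer behaviour inside a scheduled flow.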

Data Integration based on External Sources:
